Useful tools for analyzing OS diagnostic data

February 12, 2022 Leave a comment

Oracle, Linux, AWS, Azure, GCP

February 3, 2022 Leave a comment

Current environment:

$ $ORACLE_HOME/OPatch/opatch lspatches 29585399;OCW RELEASE UPDATE 19.3.0.0.0 (29585399) 29517247;ACFS RELEASE UPDATE 19.3.0.0.0 (29517247) 29517242;Database Release Update : 19.3.0.0.190416 (29517242) 29401763;TOMCAT RELEASE UPDATE 19.0.0.0.0 (29401763)

1. You must use the OPatch utility version 12.2.0.1.28 or later to apply this patch

Download p6880880_190000_Linux-x86-64.zip – OPatch 12.2.0.1.28 for DB 19.0.0.0.0 (Nov 2021), or later.

Replace existing opatch with the new one:

# export ORACLE_HOME=/u01/app/19c/grid # rm -rf $ORACLE_HOME/OPatch # su - grid $ export ORACLE_HOME=/u01/app/19c/grid $ unzip -o /u01/swtmp/p6880880_190000_Linux-x86-64.zip -d $ORACLE_HOME $ $ORACLE_HOME/OPatch/opatch version OPatch Version: 12.2.0.1.28

2. Unzip the patch 33509923 as the Grid home owner:

# su - grid $ cd /u01/swtmp $ unzip p33509923_190000_Linux-x86-64.zip

3. Determine whether any currently installed one-off patches conflict with this patch 33509923 as follows:

# su - grid $ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/swtmp/33509923/33515361 Oracle Home : /u01/app/19c/grid Central Inventory : /u01/app/oraInventory from : /u01/app/19c/grid/oraInst.loc OPatch version : 12.2.0.1.28 OUI version : 12.2.0.7.0 Log file location : /u01/app/19c/grid/cfgtoollogs/opatch/opatch2022-01-31_11-58-55AM_1.log Invoking prereq "checkconflictagainstohwithdetail" Prereq "checkConflictAgainstOHWithDetail" passed. OPatch succeeded. $ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/swtmp/33509923/33529556 $ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/swtmp/33509923/33534448 $ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/swtmp/33509923/33239955 $ $ORACLE_HOME/OPatch/opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir /u01/swtmp/33509923/33575402

In case conflict is detected, stop the patch installation and contact Oracle Support Services, otherwise continue.

4. Stop database instances running on that server.

5. Apply patch using opatchauto:

# cd /u01/swtmp/33509923/ # export ORACLE_HOME=/u01/app/19c/grid/ # export PATH=$ORACLE_HOME/bin:$PATH # $ORACLE_HOME/OPatch/opatchauto apply ... ==Following patches were SUCCESSFULLY applied: Patch: /u01/swtmp/33509923/33239955 Log: /u01/app/19c/grid/cfgtoollogs/opatchauto/core/opatch/opatch2022-02-03_11-33-44AM_1.log Patch: /u01/swtmp/33509923/33515361 Log: /u01/app/19c/grid/cfgtoollogs/opatchauto/core/opatch/opatch2022-02-03_11-33-44AM_1.log Patch: /u01/swtmp/33509923/33529556 Log: /u01/app/19c/grid/cfgtoollogs/opatchauto/core/opatch/opatch2022-02-03_11-33-44AM_1.log Patch: /u01/swtmp/33509923/33534448 Log: /u01/app/19c/grid/cfgtoollogs/opatchauto/core/opatch/opatch2022-02-03_11-33-44AM_1.log Patch: /u01/swtmp/33509923/33575402 Log: /u01/app/19c/grid/cfgtoollogs/opatchauto/core/opatch/opatch2022-02-03_11-33-44AM_1.log

Check current version:

$ $ORACLE_HOME/OPatch/opatch lspatches 33575402;DBWLM RELEASE UPDATE 19.0.0.0.0 (33575402) 33534448;ACFS RELEASE UPDATE 19.14.0.0.0 (33534448) 33529556;OCW RELEASE UPDATE 19.14.0.0.0 (33529556) 33515361;Database Release Update : 19.14.0.0.220118 (33515361) 33239955;TOMCAT RELEASE UPDATE 19.0.0.0.0 (33239955)

6. After finishing patch it is recommened to backup clusterware components:

January 6, 2022 Leave a comment

While installing Oracle software using applyRU:

$ /u01/app/oracle/product/19.3.0/dbhome_1/runInstaller -silent -applyRU /u01/swtmp/32895426/32904851/ -responseFile /opt/rsp/db_19.3_EE_swonlyinstall.rsp -ignorePrereqFailure -waitforcompletion

Got an error:

ERROR: The home is not clean. This home cannot be used since there was a failed OPatch execution in this home. Use a different home to proceed.

If a previous attempt to install Oracle software using applyRU or applyOneOffs option failed, the home becomes unusable. Before retrying the process, you need to clean up the home first:

$ rm -rf /u01/app/oracle/product/19.3.0/dbhome_1/* $ unzip -o /u01/swtmp/LINUX.X64_193000_db_home.zip -d /u01/app/oracle/product/19.3.0/dbhome_1

As a best practice I always have a new Opatch, so need to unzip it again:

$ unzip -o /u01/swtmp/p6880880_190000_Linux-x86-64.zip -d /u01/app/oracle/product/19.3.0/dbhome_1

Rerun the command:

$ /u01/app/oracle/product/19.3.0/dbhome_1/runInstaller -silent -applyRU /u01/swtmp/32895426/32904851/ -responseFile /opt/rsp/db_19.3_EE_swonlyinstall.rsp -ignorePrereqFailure -waitforcompletion Preparing the home to patch... Applying the patch /u01/swtmp/32895426/32904851/...

January 5, 2022 Leave a comment

I have upgraded Oracle GI from 12c to 19c. ASM is up and diskgroups are mounted, but flashgrid-cluster still shows that diskgroups are not mounted on any node:

[root@rac1 ~]# flashgrid-cluster FlashGrid 21.8.67.66809 #707efdc10a212421dbd3737ef8e693ae14c15964 License: Active, Marketplace Licensee: Flashgrid Inc. Support plan: 24x7 ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ FlashGrid running: OK Clocks check: OK Configuration check: OK Network check: OK Querying nodes: rac1, rac2, racq ... Cluster Name: mariGIMR Cluster status: Warning ------------------------------------------------------------- Node Status ASM_Node Storage_Node Quorum_Node Failgroup ------------------------------------------------------------- rac1 Warning Yes Yes No RAC1 rac2 Warning Yes Yes No RAC2 racq Warning No No Yes RACQ ------------------------------------------------------------- ----------------------------------------------------------------------------- GroupName Status Mounted Type TotalMiB FreeMiB OfflineDisks LostDisks Resync ReadLocal Vote ----------------------------------------------------------------------------- GRID Warning No N/A N/A N/A N/A N/A N/A N/A N/A -----------------------------------------------------------------------------

After upgrade, you should restart flashgrid_asm service on all nodes, it does not require downtime:

[root@rac1 ~]# systemctl restart flashgrid_asm [root@rac2 ~]# systemctl restart flashgrid_asm

Check the status again:

... ----------------------------------------------------------------------------- GroupName Status Mounted Type TotalMiB FreeMiB OfflineDisks LostDisks Resync ReadLocal Vote ----------------------------------------------------------------------------- GRID Good AllNodes NORMAL 204800 154336 0 0 No Enabled 3/3 -----------------------------------------------------------------------------

December 9, 2021 Leave a comment

Alert log contains:

WARNING! THE SPECIFIED LOGFILE BLOCKSIZE (512) IS LESS THAN THE MEDIA SECTOR SIZE ON DISK (4096). LOGFILE WRITES ARE NOT SECTOR ALIGNED. THIS MAY LEAD TO DEGRADED PERFORMANCE DUE TO READ-MODIFY-WRITE OPERATIONThe redo log groups have the default block size 512. So as the error mentions, the size of the redo log group member is less than the sector size attribute for the diskgroup.

This is reproducable when disk physical block size is 4096. In AWS environment, for example, r5b instances has this size for attached disks (this may change in future, need to be checked while reading this post).

1. Make sure attached disks has physical size 4096

[root@rac1 ~]# find /sys/block/*/queue -name physical_block_size | while read f ; do echo "$f $(cat $f)" ; done /sys/block/nvme0n1/queue/physical_block_size 4096 /sys/block/nvme1n1/queue/physical_block_size 4096 /sys/block/nvme2n1/queue/physical_block_size 4096 /sys/block/nvme3n1/queue/physical_block_size 4096 /sys/block/nvme4n1/queue/physical_block_size 4096 /sys/block/nvme5n1/queue/physical_block_size 4096

2. Create diskgroup with the sector size 4096

CREATE DISKGROUP "DG4K" NORMAL REDUNDANCY

FAILGROUP "RAC1" DISK '/dev/flashgrid/rac1.xvdbd' NAME "RAC1$XVDBD" SIZE 10240M

FAILGROUP "RAC2" DISK '/dev/flashgrid/rac2.xvdbd' NAME "RAC2$XVDBD" SIZE 10240M

QUORUM FAILGROUP "RACQ" DISK '/dev/flashgrid/racq.xvdbz' NAME "RACQ$XVDBZ"

ATTRIBUTE 'au_size' = '4M', 'compatible.asm' = '19.0.0.0', 'compatible.rdbms' = '19.0.0.0', 'sector_size'='4096';Make sure sector size is 4096 for this diskgroup:

SQL> select name,sector_size from v$asm_diskgroup; NAME SECTOR_SIZE ------------------------------ ----------- DATA 512 GRID 512 DG4K 4096 FRA 512

Check log group block size:

SQL> select group#, blocksize from v$log;

GROUP# BLOCKSIZE

---------- ----------

1 512

2 512

3 512

4 512

Add a new logfile member on DG4K:

SQL> alter database add logfile member '+DG4K' to group 4; Database altered.

Check alert log:

2021-12-09T15:32:04.533204+00:00 WARNING! THE SPECIFIED LOGFILE BLOCKSIZE (512) IS LESS THAN THE MEDIA SECTOR SIZE ON DISK (4096). LOGFILE WRITES ARE NOT SECTOR ALIGNED. THIS MAY LEAD TO DEGRADED PERFORMANCE DUE TO READ-MODIFY-WRITE OPERATIONS. 2021-12-09T15:32:21.080534+00:00 Completed: alter database add logfile member '+DG4K' to group 4

You should create a new redo log group (not member, but group) with block size=4K:

SQL> alter database add logfile group 5 ('+DG4K') size 512M blocksize 4096;

December 9, 2021 Leave a comment

After changing the quorum node instance type, my cluster’s one of the voting file became offline:

[root@rac1 ~]# crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE 49400dd2b39a4f12bf3c5fa677c056fe (/dev/flashgrid/rac2.xvdba) [GRID] 2. ONLINE 4a6d94d206104fe6bfbe5435ac7f4586 (/dev/flashgrid/rac1.xvdba) [GRID] 3. OFFLINE faf99f5fd78f4f35bfe833bdd1d22b9a (/dev/flashgrid/racq.xvdba) [GRID] Located 3 voting disk(s).

Find out the ASM disk name which contains mentioned voting file, offline and online it:

SQL> select NAME from v$ASM_DISK where PATH='/dev/flashgrid/racq.xvdba'; NAME ------------------------------ RACQ$XVDBA

Offline the disk:

SQL> alter diskgroup GRID offline quorum disk "RACQ$XVDBA"; Diskgroup altered.

Online again:

SQL> alter diskgroup GRID online quorum disk "RACQ$XVDBA"; Diskgroup altered.

Check the status again:

SQL> !crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE 49400dd2b39a4f12bf3c5fa677c056fe (/dev/flashgrid/rac2.xvdba) [GRID] 2. ONLINE 4a6d94d206104fe6bfbe5435ac7f4586 (/dev/flashgrid/rac1.xvdba) [GRID] 3. ONLINE 784f924d23c94f3fbf4287c5c6ef572c (/dev/flashgrid/racq.xvdba) [GRID]

December 8, 2021 Leave a comment

Connecting via ssh to the newly created host causes error:

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!

Someone could be eavesdropping on you right now (man-in-the-middle attack)!

It is also possible that a host key has just been changed.

The fingerprint for the ECDSA key sent by the remote host is

SHA256:AxfpHOVc8NP2OYPGce92HMa5LADDQj2V98ZKgoQHFGU.

Please contact your system administrator.

Add correct host key in /Users/mari/.ssh/known_hosts to get rid of this message.

Offending ECDSA key in /Users/mari/.ssh/known_hosts:315

ECDSA host key for 52.1.130.91 has changed and you have requested strict checking.

Host key verification failed.I had another server with the same Public IP, so when I connected to the old saver the host identification has been saved in known_hosts. After a while I have removed old server and created a new one and assigned the PIP. The host identification has changed, but old entries were still saved in known_hosts.

Open /Users/mari/.ssh/known_hosts and delete only the line containing mentioned IP (52.1.130.91 in my case), save file and retry the connection.

It should work now.

November 30, 2021 Leave a comment

To migrate all content from +GRID diskgroup to another newly created one, we need to know what is the list of necessary files that are located on it:

Let’s migrate all of them one by one:

1. Locate the Oracle ASM password file:

[grid@rac1 ~]$ asmcmd pwget --asm +GRID/orapwASM

2. Migrate the password file:

[grid@rac1 ~]$ asmcmd pwmove --asm -f +GRID/orapwASM +GRID2/orapwASM moving +GRID/orapwASM -> +GRID2/orapwASM

3. Verify that the file has a new path:

[grid@rac1 ~]$ asmcmd pwget --asm +GRID2/orapwASM

1. Locate the Oracle ASM SPFILE:

[grid@rac1 ~]$ asmcmd spget +GRID/marirac/ASMPARAMETERFILE/registry.253.1088678891

2. Migrate the spfile:

[grid@rac1 ~]$ asmcmd spmove +GRID/marirac/ASMPARAMETERFILE/registry.253.1088678891 +GRID2/marirac/ASMPARAMETERFILE/spfileASM ORA-15032: not all alterations performed ORA-15028: ASM file '+GRID/marirac/ASMPARAMETERFILE/registry.253.1088678891' not dropped; currently being accessed (DBD ERROR: OCIStmtExecute)

The error message can be ignored, the new location will be used after we restart CRS.

3. Verify:

[grid@rac1 ~]$ asmcmd spget +GRID2/marirac/ASMPARAMETERFILE/spfileASM

1. Get the current OCR location:

[grid@rac1 ~]$ ocrcheck -config Oracle Cluster Registry configuration is : Device/File Name : +GRID

2. Move OCR:

[grid@rac1 ~]$ ocrconfig -add +GRID2 PROT-20: Insufficient permission to proceed. Require privileged user [grid@rac1 ~]$ exit logout [root@rac1 ~]# ocrconfig -add +GRID2 [root@rac1 ~]# ocrconfig -delete +GRID

3. Verify:

[root@rac1 ~]# ocrcheck -config Oracle Cluster Registry configuration is : Device/File Name : +GRID2

1. Get the current location:

[root@rac1 ~]# crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE 544b7b2dc9f14f8dbf8f5c560a32a95f (/dev/flashgrid/rac2.xvdba) [GRID] 2. ONLINE c4035c7009be4f26bffd663651e4d520 (/dev/flashgrid/rac1.xvdba) [GRID] 3. ONLINE 5737c31731574fa8bf2acc107fbbd364 (/dev/flashgrid/racq.xvdba) [GRID] Located 3 voting disk(s).

2. Move:

[root@rac1 ~]# crsctl replace votedisk +GRID2 Successful addition of voting disk 26221fd4d7334fa8bfc98be1908ee3ef. Successful addition of voting disk 093f9c21b9864f87bfc4853547f05a16. Successful addition of voting disk 9c2a9fd2fc334f7ebfb44c04bdb0cf57. Successful deletion of voting disk 544b7b2dc9f14f8dbf8f5c560a32a95f. Successful deletion of voting disk c4035c7009be4f26bffd663651e4d520. Successful deletion of voting disk 5737c31731574fa8bf2acc107fbbd364. Successfully replaced voting disk group with +GRID2. CRS-4266: Voting file(s) successfully replaced

3. Verify:

[root@rac1 ~]# crsctl query css votedisk ## STATE File Universal Id File Name Disk group -- ----- ----------------- --------- --------- 1. ONLINE 26221fd4d7334fa8bfc98be1908ee3ef (/dev/flashgrid/rac1.xvdbc) [GRID2] 2. ONLINE 093f9c21b9864f87bfc4853547f05a16 (/dev/flashgrid/rac2.xvdbc) [GRID2] 3. ONLINE 9c2a9fd2fc334f7ebfb44c04bdb0cf57 (/dev/flashgrid/racq.xvdbz) [GRID2] Located 3 voting disk(s).

Please note that having OCR backup on the same location where OCR is located is not a good practice, you should have another disgroup for that. So let’s assume, we have separate DG for that.

1. Check the current location:

[root@rac1 ~]# ocrconfig -showbackup rac2 2021/11/29 17:07:02 +GRID:/marirac/OCRBACKUP/backup00.ocr.276.1089911215 1443639413 rac2 2021/11/25 16:52:08 +GRID:/marirac/OCRBACKUP/backup01.ocr.275.1089564721 1443639413 rac2 2021/11/21 14:13:23 +GRID:/marirac/OCRBACKUP/backup02.ocr.277.1089209597 1443639413 rac2 2021/11/29 17:07:02 +GRID:/marirac/OCRBACKUP/day.ocr.272.1089911223 1443639413 rac1 2021/11/15 15:05:26 +GRID:/marirac/OCRBACKUP/week.ocr.273.1088694327 1443639413 PROT-25: Manual backups for the Oracle Cluster Registry are not available

2. Reconfigure:

[root@rac1 ~]# ocrconfig -backuploc +FRA

There are automatic OCR backups that are taken in the past 4 hours, 8 hours, 12 hours, and in the last day and week. Until this time passes, we can run manual backup for safety:

[root@rac1 ~]# ocrconfig -manualbackup rac2 2021/11/30 12:20:15 +FRA:/marirac/OCRBACKUP/backup_20211130_122015.ocr.257.1089980415 1443639413

3. Verify:

[root@rac1 ~]# ocrconfig -showbackup rac2 2021/11/29 17:07:02 +GRID:/marirac/OCRBACKUP/backup00.ocr.276.1089911215 1443639413 rac2 2021/11/25 16:52:08 +GRID:/marirac/OCRBACKUP/backup01.ocr.275.1089564721 1443639413 rac2 2021/11/21 14:13:23 +GRID:/marirac/OCRBACKUP/backup02.ocr.277.1089209597 1443639413 rac2 2021/11/29 17:07:02 +GRID:/marirac/OCRBACKUP/day.ocr.272.1089911223 1443639413 rac1 2021/11/15 15:05:26 +GRID:/marirac/OCRBACKUP/week.ocr.273.1088694327 1443639413 rac2 2021/11/30 12:20:15 +FRA:/marirac/OCRBACKUP/backup_20211130_122015.ocr.257.1089980415 1443639413

November 26, 2021 Leave a comment

Databases were crashed and alert logs were showing errors:

Fri Nov 12 13:23:39 2021

Process startup failed, error stack:

Errors in file /app/oracle/diag/rdbms/orcl/orcl/trace/orcl_psp0_25852.trc:

ORA-27300: OS system dependent operation:fork failed with status: 11

ORA-27301: OS failure message: Resource temporarily unavailable

ORA-27302: failure occurred at: skgpspawn5

We’ve implemented a procedure to take process list (by ps -ef), so output during that time was the following:

oracle 592 1 0 13:57 ? 00:00:00 [oracle] <defunct> oracle 593 1 0 13:55 ? 00:00:00 [oracle] <defunct> oracle 615 1 0 13:57 ? 00:00:00 [oracle] <defunct> oracle 618 1 0 13:57 ? 00:00:00 [oracle] <defunct> ...

Not only Oracle, but sshd and some other processes were also experiencing the same:

oracle 22335 22331 0 13:52 ? 00:00:00 [ps] <defunct> oracle 22336 22331 0 13:52 ? 00:00:00 [grep] <defunct> oracle 22338 22331 0 13:52 ? 00:00:00 [grep] <defunct> oracle 14389 1 0 13:24 ? 00:00:00 [sshd] <defunct> oracle 15852 1 0 13:23 ? 00:00:00 [sshd] <defunct>

A large amount of Zombie processes, causing applications to fail.

You may find a lot of recommendations about increasing kernel.pid_max, similarly ORA-27300: OS System Dependent Operation:fork Failed With Status: 11 (Doc ID 1546393.1). Of course, you can make this parameter unlimited, but this will not solve the problem, it will just postpone it.

The reason for high number of defunct processes is described here, https://access.redhat.com/solutions/2438581.

The parent process for our defunct processes was systemd (pid=1) and the version of it was systemd-219-19.el7.x86_64.

The solution is to update systemd to the latest version.

November 25, 2021 Leave a comment

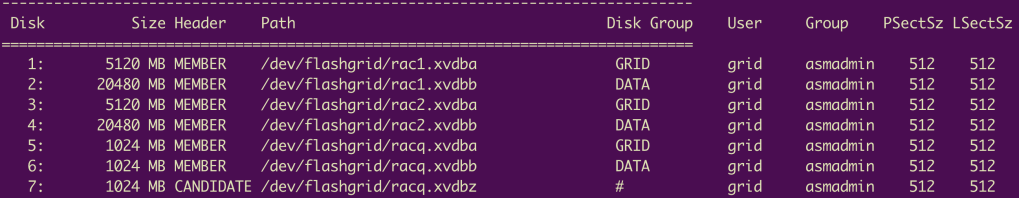

$GRID_HOME/bin/kfod has many usages (kfod -help), one of them is to print disk attributes without connecting to an ASM instance. Even more, you can display these attributes while ASM is not running. Imagine how useful can it be for you, when troubleshooting ASM startup issues.

Let’s display: disk size, header, path, diskgroup name, owner user, owner group, physical sector size, logical sector size.

[root@rac1~]# kfod op=disks status=true disks=all dscvgroup=true diskattr=all

Let’s see if ASM is running during that time:

[root@rac1~]# ps -ef|grep smon root 3716 1 4 12:36 ? 00:00:01 /u01/app/19.3.0/grid/bin/osysmond.bin root 5178 5083 0 12:37 pts/0 00:00:00 grep --color=auto smon

There is no asm_smon_+ASM1, which means ASM is down.