PRVG-11960 : Set user ID bit is not set for file oradism

May 16, 2025

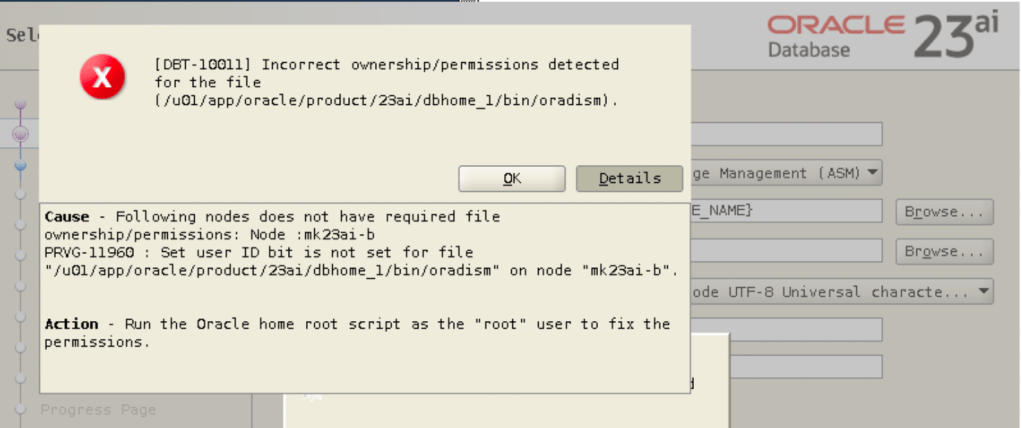

Problem:

While running ASMCA, I got the following error:

Cause - Following nodes does not have required file ownership/permissions: Node :mk23ai-b PRVG-11960 : Set user ID bit is not set for file "/u01/app/oracle/product/23ai/dbhome_1/bin/oradism" on node "mk23ai-b". Action - Run the Oracle home root script as the "root" user to fix the permissions.

Troubleshoot:

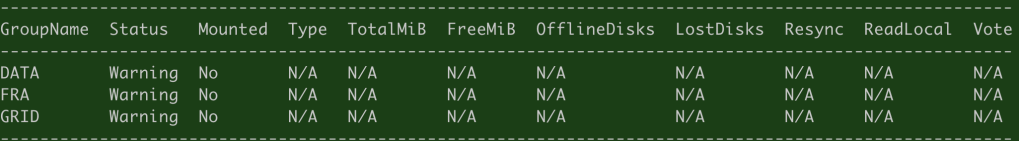

Check the current permissions on the file:

oracle@mk23ai-b:~$ ll /u01/app/oracle/product/23ai/dbhome_1/bin/oradism

-rwxr-x---. 1 root oinstall 1138016 Jul 11 2024 /u01/app/oracle/product/23ai/dbhome_1/bin/oradism
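You can also test for the bit directly instead of reading the ls output by eye. The following is a minimal sketch. It assumes GNU coreutils stat, which is standard on Oracle Linux and RHEL. The function name check_setuid and the ORADISM override variable are hypothetical helpers for illustration only.

```shell
#!/bin/sh
# Hypothetical helper: report whether the set-user-ID bit is set on a file.
# Assumes GNU coreutils "stat" (standard on Oracle Linux / RHEL).
# Exit codes: 0 = bit set, 1 = bit missing, 2 = file not found.
check_setuid() {
    f="$1"
    if [ ! -e "$f" ]; then
        echo "MISSING: $f"
        return 2
    fi
    # %a = octal mode (includes the 4000 setuid bit), %A = symbolic mode,
    # %U:%G = owner and group names
    mode_info=$(stat -c '%a %A %U:%G' "$f")
    # "test -u" is true when the file exists and its set-user-ID bit is set
    if [ -u "$f" ]; then
        echo "OK: setuid bit set ($mode_info) on $f"
        return 0
    else
        echo "NOT SET: setuid bit missing ($mode_info) on $f"
        return 1
    fi
}

# Path taken from the error message; set ORADISM=... if your home differs.
check_setuid "${ORADISM:-/u01/app/oracle/product/23ai/dbhome_1/bin/oradism}"
```

For the file above, the octal mode corresponds to 750 (rwxr-x---). Once the setuid bit is added it becomes 4750, where the leading 4 is the setuid bit.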

Solution:

The Action part of the error message tells you what to do: run the Oracle home root script as the root user. The oradism file named in the error is located in the RDBMS (database) home. Connect to the affected node (mk23ai-b) as root and run root.sh from that home:

root@mk23ai-b:~# /u01/app/oracle/product/23ai/dbhome_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/23ai/dbhome_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Check the file permissions again:

oracle@mk23ai-b:~$ ll /u01/app/oracle/product/23ai/dbhome_1/bin/oradism

-rwsr-x---. 1 root oinstall 1138016 Jul 11 2024 /u01/app/oracle/product/23ai/dbhome_1/bin/oradism

This time the set-user-ID bit is set. The owner's execute position now shows "s" instead of "x" (-rwsr-x--- instead of -rwxr-x---).

Normally, when you run a program (an executable file), it runs with your own permissions. This means it can only do what your user account is allowed to do. If the setuid bit is set on a file, however, the program runs with the permissions of the file's owner, regardless of who runs it. Because oradism is owned by root, the bit lets members of the oinstall group, such as the oracle user, run it with root privileges.
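To see which files in a home carry the setuid bit, you can ask find to match the 04000 mode bit. This is a sketch under several assumptions. It assumes GNU find and GNU stat are available. It also assumes ORACLE_HOME is set to the database home, as printed by root.sh. The function name list_setuid is hypothetical. The exact list of files it reports depends on your home, so compare it against a known-good node rather than a fixed list.

```shell
#!/bin/sh
# Hypothetical helper: list regular files under DIR that carry the setuid bit.
# "-perm -4000" matches any file whose mode includes 04000 (setuid),
# whatever its other permission bits are.
list_setuid() {
    dir="${1:?usage: list_setuid DIR}"
    # Print octal mode, symbolic mode, owner:group and path for each match
    find "$dir" -type f -perm -4000 -exec stat -c '%a %A %U:%G %n' {} +
}

# Assumes ORACLE_HOME points at the database home (e.g. the one root.sh reported).
if [ -n "${ORACLE_HOME:-}" ] && [ -d "$ORACLE_HOME/bin" ]; then
    list_setuid "$ORACLE_HOME/bin"
fi
```

Running this on each node named in the error is a quick way to spot a node where root.sh was skipped.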

You can now continue with ASMCA.